Dialing in LLM Costs for Website and Content Analysis

Types of Costs

Using LLMs has costs:

Money costs. Some models are free (or you can run your own models locally for "free"), but commercial / frontier LLMs have a cost.

Processing time. LLMs are very slow compared to other automation techniques such as scraping, which can be a problem when you are running across a large body of content.

Energy / environmental. Although models appear to be becoming more and more energy efficient, energy usage is still a consideration.

Time formulating prompts. Although frontier models are getting much better at interpreting prompts, defining effective prompts takes time.

Privacy. Depending on how you access an LLM, the information you send could be used to train models. Note: Chimera only uses paid API access to commercial LLMs, and all of those LLM providers currently say that data sent this way will not be used for training.

Copyright issues. Models have been trained on data that the AI companies did not have the content authors' permission to use.

Cost Drivers

These are drivers of the first three types of costs above:

Iterations. For a long time I have written about the need for iteration in content analysis (see Rethinking the Content Inventory: Exploration). One of the main reasons to create Content Chimera was to enable easier iteration and exploration. But if you run a relatively expensive LLM prompt, running it multiple times (for example, as you refine the prompt) can rack up the costs.

Content Count. The more pieces of content you analyze with an LLM, the more expensive it is.

On these first two drivers, Chimera has mechanisms to: a) test against specific URLs (which have already been preprocessed for you), b) sample against a set of content, and c) only then run against all relevant content (and also create a set of content assertions to test against). This lets you iterate on a small set before continuing on to the full relevant set.
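Chimera's own sampling mechanism isn't shown here. As a generic sketch of the idea, the Python below draws a reproducible random sample of URLs (so every prompt revision is tested on the same pages) and scales the cost observed on that sample up to the full set. `pick_sample`, `extrapolate_cost`, the example URLs, and the $0.40 trial cost are all hypothetical.

```python
import random


def pick_sample(urls, sample_size, seed=42):
    """Return a reproducible random sample of URLs to trial a prompt on."""
    if sample_size >= len(urls):
        return list(urls)
    # A fixed seed means each prompt iteration is tested on the same pages
    rng = random.Random(seed)
    return rng.sample(urls, sample_size)


def extrapolate_cost(sample_cost, sample_size, total_count):
    """Scale the cost observed on the sample up to the full content set."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return sample_cost / sample_size * total_count


urls = [f"https://example.com/page-{i}" for i in range(5000)]
sample = pick_sample(urls, 50)
# Suppose the 50-page trial run cost $0.40 (hypothetical figure)
print(round(extrapolate_cost(0.40, len(sample), len(urls)), 2))  # 40.0
```

The extrapolation is linear, so it is only as good as the sample is representative; pages that vary a lot in length will skew it.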

Content Size. The more words (if sending text) you send to an LLM, the more input tokens you use, and each input token has a cost. Each model also has a limit on how many input tokens it accepts (Chimera ensures you don't go over this limit by truncating text).
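Chimera handles truncation for you. For anyone wiring this up themselves, here is a minimal sketch of budget-based truncation. It assumes the commonly cited rough ratio of about four characters per token for English text; real counts come from the specific model's tokenizer, so `CHARS_PER_TOKEN` and the helper names are illustrative assumptions.

```python
# Rough rule of thumb for English text; real counts need the model's own tokenizer
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Approximate the number of input tokens a text will consume."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def truncate_to_budget(text, max_tokens, reserved_tokens=0):
    """Cut text so that it, plus the tokens reserved for the prompt, fits the limit."""
    budget = max_tokens - reserved_tokens
    if budget <= 0:
        raise ValueError("prompt alone exceeds the token limit")
    max_chars = budget * CHARS_PER_TOKEN
    if len(text) <= max_chars:
        return text
    cut = text[:max_chars]
    # Back off to the last space so a word isn't split in half
    last_space = cut.rfind(" ")
    return cut[:last_space] if last_space > 0 else cut


page = "word " * 10_000
print(estimate_tokens(page))  # 12500
short = truncate_to_budget(page, max_tokens=8_000, reserved_tokens=500)
print(estimate_tokens(short))  # 7500
```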

Preprocessing. There are a variety of reasons you need to preprocess content before passing it to an LLM, the most important probably being to limit the content size issue above. Some example preprocessing: a) only looking at the part of the page that's relevant (for instance, selecting it by XPath or other means) and b) converting the HTML to a smaller format that only contains the text seen by people using web browsers (Content Chimera does both of these types of preprocessing).
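Content Chimera's preprocessing code isn't shown here, so the following is only a standard-library sketch of the second technique (visible-text extraction) plus a simplified version of the first: instead of a full XPath, it limits extraction to a single region tag such as `<main>`. The tag sets and the `visible_text` helper are assumptions for illustration.

```python
from html.parser import HTMLParser

# Tags whose contents a person never sees as page text
SKIP_TAGS = {"script", "style", "noscript", "template", "head"}
# Block-level tags get a line break so adjacent blocks don't run together
BLOCK_TAGS = {"p", "div", "li", "ul", "ol", "br", "h1", "h2", "h3", "h4", "h5", "h6",
              "section", "article", "main", "nav", "header", "footer", "tr", "td", "th"}


class VisibleTextExtractor(HTMLParser):
    """Collect human-visible text, optionally only inside one region tag (e.g. <main>)."""

    def __init__(self, region_tag=None):
        super().__init__()
        self.region_tag = region_tag
        # With no region tag, the whole document counts as "inside the region"
        self.region_depth = 0 if region_tag else 1
        self.skip_depth = 0
        self.chunks = []

    def _emit(self, text):
        if self.region_depth > 0 and self.skip_depth == 0:
            self.chunks.append(text)

    def handle_starttag(self, tag, attrs):
        if tag == self.region_tag:
            self.region_depth += 1
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        if tag in BLOCK_TAGS:
            self._emit("\n")

    def handle_endtag(self, tag):
        if tag in BLOCK_TAGS:
            self._emit("\n")
        if tag == self.region_tag and self.region_depth > 0:
            self.region_depth -= 1
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        self._emit(data)


def visible_text(html_source, region_tag=None):
    parser = VisibleTextExtractor(region_tag)
    parser.feed(html_source)
    parser.close()
    # Collapse all runs of whitespace into single spaces
    return " ".join("".join(parser.chunks).split())


page = (
    "<html><head><title>Acme</title><style>p{color:red}</style></head>"
    "<body><nav>Home | About</nav>"
    "<main><h1>Pricing</h1><script>track()</script>"
    "<p>Plans start at <b>$10</b>.</p></main></body></html>"
)
print(visible_text(page))                   # Home | About Pricing Plans start at $10.
print(visible_text(page, region_tag="main"))  # Pricing Plans start at $10.
```

Every markup character and navigation string dropped here is an input token that never gets billed.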

Prompt and Other Context Size. The prompt itself has a size, but the bigger cost can be any other context you send to an LLM. For instance, if you are using an LLM to define a chart, you need to send it information about potentially useful (and available) fields. There are really two costs to the context you send: a) determining what context to send (for instance, which fields might be relevant) and b) the size of that context. For example, Chimera uses a vector database to find the likely relevant fields in one step and then passes only those field definitions to an LLM.
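Chimera does this with a vector database. To show the shape of the idea without any external services, the sketch below swaps in a much cruder technique, bag-of-words cosine similarity over field descriptions, to pick the top-k fields to include in a chart prompt. The field names, descriptions, and stop-word list are hypothetical.

```python
import math
import re
from collections import Counter

STOP_WORDS = {"a", "an", "and", "by", "for", "in", "is", "of", "on", "or", "the", "to", "was"}


def bag_of_words(text):
    """Lowercased word counts, ignoring a few very common words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS)


def cosine(a, b):
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_fields(request, field_descriptions, k=2):
    """Return up to k field names whose descriptions best match the request."""
    query = bag_of_words(request)
    scored = [(cosine(query, bag_of_words(desc)), name)
              for name, desc in field_descriptions.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by name
    return [name for score, name in scored[:k] if score > 0]


fields = {
    "publish_date": "date the page was first published",
    "word_count": "word count of the visible text on the page",
    "topics": "list of topics or tags assigned to the page",
    "owner": "team or person responsible for maintaining the content",
}
print(select_fields("chart of word count by topics", fields))  # ['word_count', 'topics']
```

Only the selected field definitions go into the prompt, so the context size grows with `k` rather than with the total number of fields. Embeddings would also match synonyms, which this lexical stand-in cannot.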

Response Size. By default, most people use LLMs in a way that produces very verbose output, which is useful in many use cases. But verbosity is generally not useful in content analysis, where we want constrained values (for instance, if we want a list of topics for a page, then we only want the list of topics and not an extended explanation). Constraining the response keeps output tokens down, and output tokens are generally more expensive than input tokens.
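Many providers offer some form of structured output, but how a schema is passed differs between APIs, so the sketch below stays provider-neutral: it defines a JSON Schema for a constrained topics response and a parser that rejects anything else. The taxonomy values and the `parse_topics` helper are hypothetical.

```python
import json

# Hypothetical controlled vocabulary for the topics field
ALLOWED_TOPICS = ["careers", "pricing", "security", "support"]

# JSON Schema describing the only output we want back; how it is handed to the
# model depends on the provider's structured-output feature
TOPICS_SCHEMA = {
    "type": "object",
    "properties": {
        "topics": {
            "type": "array",
            "items": {"type": "string", "enum": ALLOWED_TOPICS},
            "maxItems": 3,
        }
    },
    "required": ["topics"],
    "additionalProperties": False,
}


def parse_topics(raw_response):
    """Parse the model's JSON reply, keeping at most three allowed topic values."""
    data = json.loads(raw_response)  # raises ValueError (JSONDecodeError) on non-JSON
    if not isinstance(data, dict) or not isinstance(data.get("topics"), list):
        raise ValueError("response does not match the expected shape")
    return [t for t in data["topics"] if t in ALLOWED_TOPICS][:3]


print(parse_topics('{"topics": ["pricing", "security"]}'))  # ['pricing', 'security']
```

A reply like this is a handful of output tokens, where a prose answer explaining the same topics could run to hundreds.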

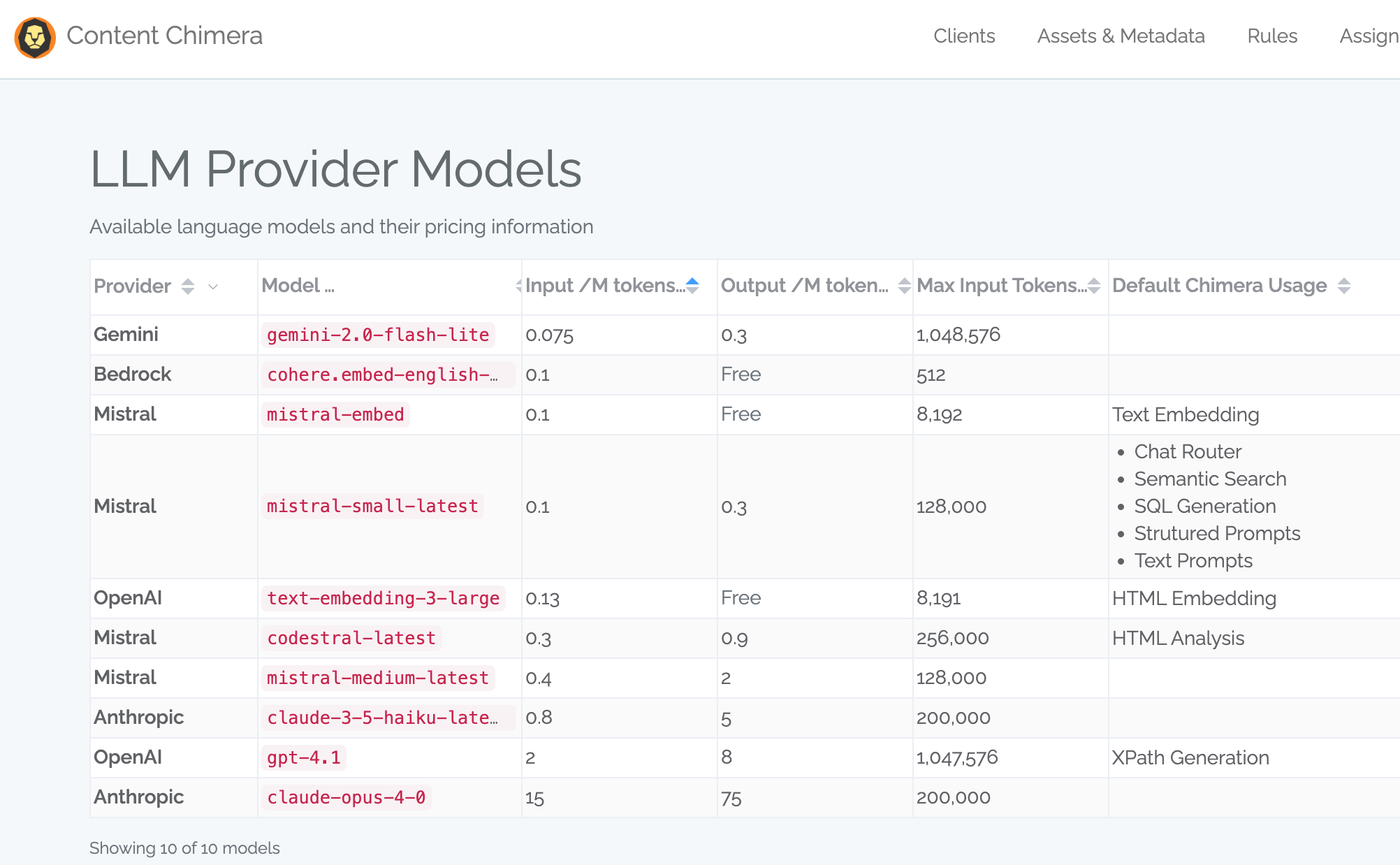

Model Selection. Some models are far slower than others, and some are far more expensive than others. Furthermore, some models are much less expensive on input tokens than on output tokens (and, in general, content analysis mostly burns input tokens). Of course there's a balance: for some tasks you do need more expensive models (such as actual analysis of HTML). Chimera is fairly agnostic about models (although in most cases the model must support structured responses for content analysis), allowing different models from different providers to be used. In general we have had great success with the Mistral Small model.
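To see why per-token pricing matters so much at scale, here is a back-of-the-envelope cost calculator. The two price points are hypothetical placeholders, not any provider's actual rates, and so are the page count and per-page token figures; the point is the structure of the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class ModelPricing:
    name: str
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


def run_cost(model, pages, input_tokens_per_page, output_tokens_per_page):
    """Estimated USD cost of running one prompt over every page."""
    total_in = pages * input_tokens_per_page
    total_out = pages * output_tokens_per_page
    return (total_in * model.input_per_million
            + total_out * model.output_per_million) / 1_000_000


# Hypothetical price points for illustration only; check each provider's price list
models = [
    ModelPricing("small-model", 0.10, 0.30),
    ModelPricing("frontier-model", 3.00, 15.00),
]
for m in models:
    cost = run_cost(m, pages=10_000, input_tokens_per_page=2_000, output_tokens_per_page=20)
    print(m.name, round(cost, 2))
# small-model 2.06
# frontier-model 63.0
```

With 2,000 input tokens and 20 output tokens per page, input accounts for 2.00 of the small model's 2.06 total, which is why input pricing dominates once responses are constrained. Multiply by the number of prompt iterations to get a realistic budget.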

One Big Cost Lever

When you use an LLM to categorize or extract information from content (and so look at a lot of content), the biggest cost lever you have is whether you:

Inexpensive option: Use a model to better automate, OR

Expensive option: Use a model to look at each piece of content.

Inexpensive option: Use a model to better automate

LLMs are extraordinarily good (though certainly not perfect) at generating code and configuration information. You can leverage this for efficient categorization / extraction. For instance, instead of asking an LLM to extract the list of topics exposed on every page of your site, you can ask an LLM to define an XPath that can then be used to scrape that information out of every piece of content on your site. Because the LLM runs once rather than once per page, this is far less expensive, assuming you have the right toolset in place.
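A minimal sketch of the inexpensive pattern: one LLM call (not shown) proposes an expression, and ordinary code then applies it to every page at essentially no marginal cost. `TOPICS_XPATH` is a hypothetical example of what such a call might return, the pages are assumed to be well-formed XHTML, and Python's `xml.etree.ElementTree` only supports a limited subset of XPath, so messy real-world HTML would need a more forgiving parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical expression returned by a single LLM call that was shown one sample page
TOPICS_XPATH = ".//ul[@class='topics']/li"


def extract_topics(xhtml, xpath=TOPICS_XPATH):
    """Apply the generated expression to one page; no LLM call per page."""
    root = ET.fromstring(xhtml)
    return [li.text.strip() for li in root.findall(xpath) if li.text]


pages = {
    "/a": "<html><body><ul class='topics'><li>Pricing</li><li>Security</li></ul></body></html>",
    "/b": "<html><body><p>No tags here</p></body></html>",
}
for url, xhtml in pages.items():
    print(url, extract_topics(xhtml))
# /a ['Pricing', 'Security']
# /b []
```

For N pages this is one LLM call plus N cheap parses instead of N LLM calls. A page that doesn't use the expected markup simply returns an empty list, so it is still worth spot-checking a sample.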

Expensive option: Use a model to look at each piece of content

The knee-jerk way of using an LLM is to write a prompt and run that prompt against every piece of content. Sometimes this may be the only way to automate a task. For instance, if there is no taxonomy on your site and/or topics are not exposed in a consistent manner, then you may need an LLM to look at every piece of content to figure out its topic.