Use Cases for AI in Digital Presence and Content Analysis

First off, let me say that LLMs should NOT be used indiscriminately for digital presence and content analysis. They most certainly should not. In fact, if there's another way of doing it (such as scraping out values) then it should almost certainly be done the other way. That said, LLMs open up some interesting possibilities.

Useful things that were impossible before

Some kinds of content analysis were impossible, or at least impractical, before LLMs.

Categorization based on text analysis

For years I have been investigating ways of categorizing content by things like topic.

A long time ago, when I was at the World Bank, the taxonomy team trained a system to categorize content by topic in a way that was more effective than human categorization. But that took a lot of dedicated effort (and a volume of incoming content that justified it). So I have been on the lookout for this sort of thing.

In Chimera I have tried all sorts of packages over time, from spaCy to NLTK / Gensim and RAKE for categorization (and before that, things like Open Calais as well). These have always been disappointing for things like categorization, at least for doing this type of analysis in a generalized manner (without needing lots of tuning for a specific website for example). Over the last year, this has dramatically changed. Of course it isn't totally perfect, but it opens up all sorts of possibilities.

Types of categorization that could be promising, depending on the exact needs of a particular digital presence:

Topics.

Audience.

Tone.

Content Type. Sometimes templates are chosen as a way of getting pages to look a certain way rather than to reflect the actual content type. An LLM can help categorize what type of content the page is actually written as. This is also an excellent example of where an LLM most definitely should not be used if this information is already well structured and clear.

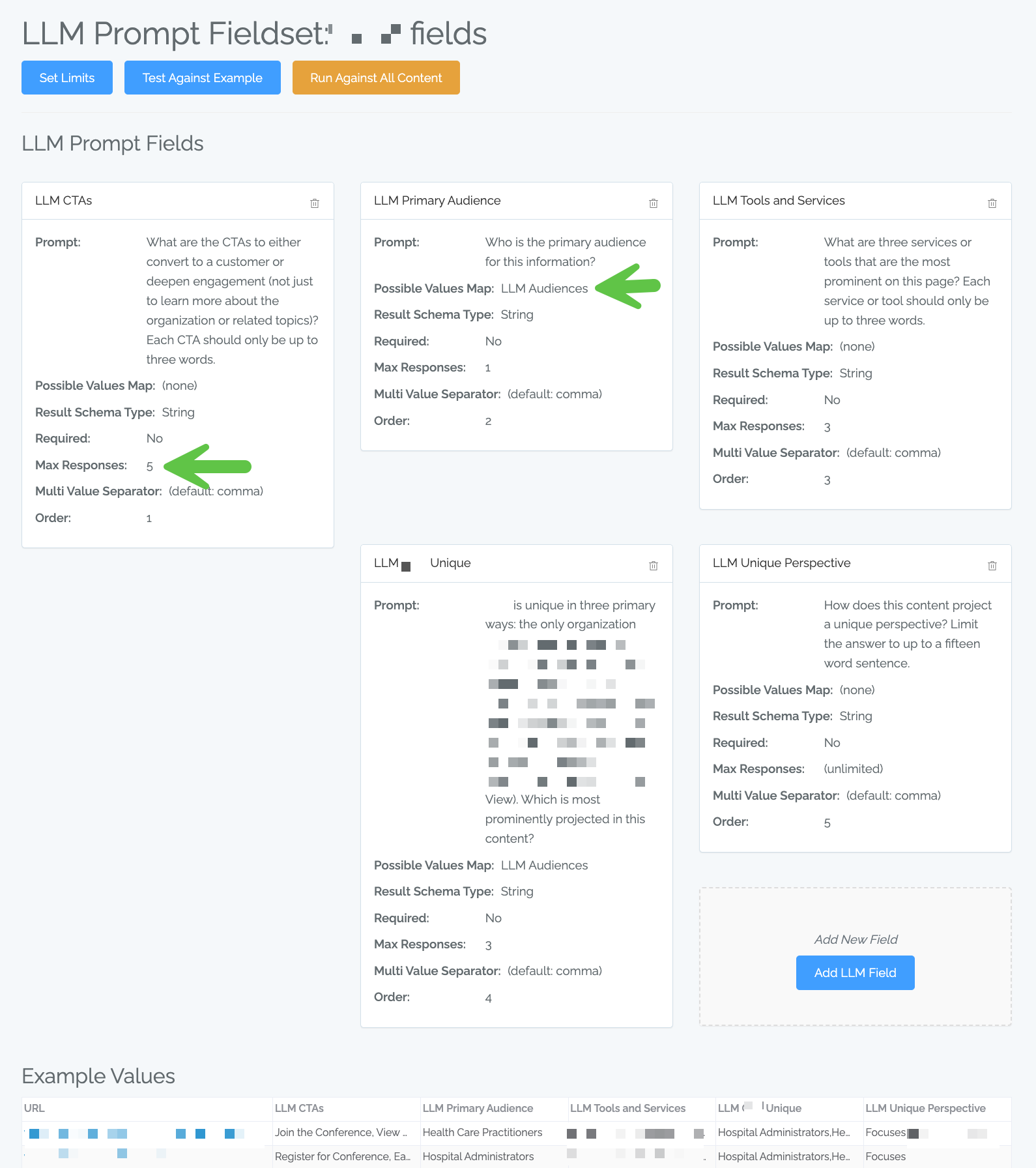

The example below (from Chimera, from the LLM Field Set definition page, where multiple fields are requested in a single prompt to save on costs) shows:

Example prompts (the system adds more to the prompt for context, etc.) for CTAs, Primary Audience, Tools and Services, and a couple of fields about uniqueness (specific to that organization)

How many possible responses are allowed per field

A field constrained to a specific list of possible audiences (the "LLM Audiences" Map)
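To make the idea of requesting several fields in one prompt more concrete, here is a minimal sketch in Python. It is not how Chimera does it: the field names, prompt wording, audience list, and both function names are hypothetical, and the actual call to an LLM is left out. It only shows building one prompt for multiple fields, then enforcing the per-field response limit and the allowed list on whatever JSON comes back.

```python
import json

# Hypothetical field set: names, prompts, limits, and the audience list are
# illustrative assumptions, not Chimera's actual configuration.
FIELDS = {
    "primary_audience": {
        "prompt": "Who is this page primarily written for?",
        "max_responses": 1,
        "allowed": ["Researchers", "Policy makers", "Journalists", "General public"],
    },
    "ctas": {
        "prompt": "List the calls to action on this page.",
        "max_responses": 3,
        "allowed": None,  # free text, no fixed list
    },
}

def build_prompt(page_text, fields=FIELDS):
    """Combine every field into a single prompt so the page is sent only once."""
    lines = [
        "Answer with one JSON object that has one key per field.",
        "Each value must be a list of strings.",
        "",
    ]
    for name, spec in fields.items():
        line = f"- {name}: {spec['prompt']} (at most {spec['max_responses']} value(s))"
        if spec["allowed"]:
            line += " Choose only from: " + ", ".join(spec["allowed"]) + "."
        lines.append(line)
    lines += ["", "Page content:", page_text]
    return "\n".join(lines)

def validate_response(raw_json, fields=FIELDS):
    """Drop values outside the allowed list and enforce the per-field limit."""
    data = json.loads(raw_json)
    cleaned = {}
    for name, spec in fields.items():
        values = data.get(name, [])
        if isinstance(values, str):
            values = [values]  # tolerate a bare string instead of a list
        elif not isinstance(values, list):
            values = []
        if spec["allowed"]:
            values = [v for v in values if v in spec["allowed"]]
        cleaned[name] = values[: spec["max_responses"]]
    return cleaned
```

Validating the response in code, rather than trusting the model to follow the constraints, means an unexpected audience value never reaches the analysis.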

Extraction from very inconsistent templates

Sometimes different sites, or sections of sites, show the same information (say, author, date, and title) but present it in very different ways. Moreover, you may not know where things are consistent and where they are not. An LLM can help find those pieces of information and rationalize them across inconsistent templates or template usage. In the above example, searching for CTAs is an example of this (although, in hindsight, there may have been some patterns we could have used instead).

Summarization

LLMs are fantastic at summarization. The "Unique Perspective" is a bit of an example of this. One option I've considered but not yet leveraged is asking for simple summaries of content that could then be used for a person to quickly scan through some content (especially if you are working with a site "blind" that you haven't dealt with before). This may also be useful for generating summaries for different-sized fields, such as the meta description.

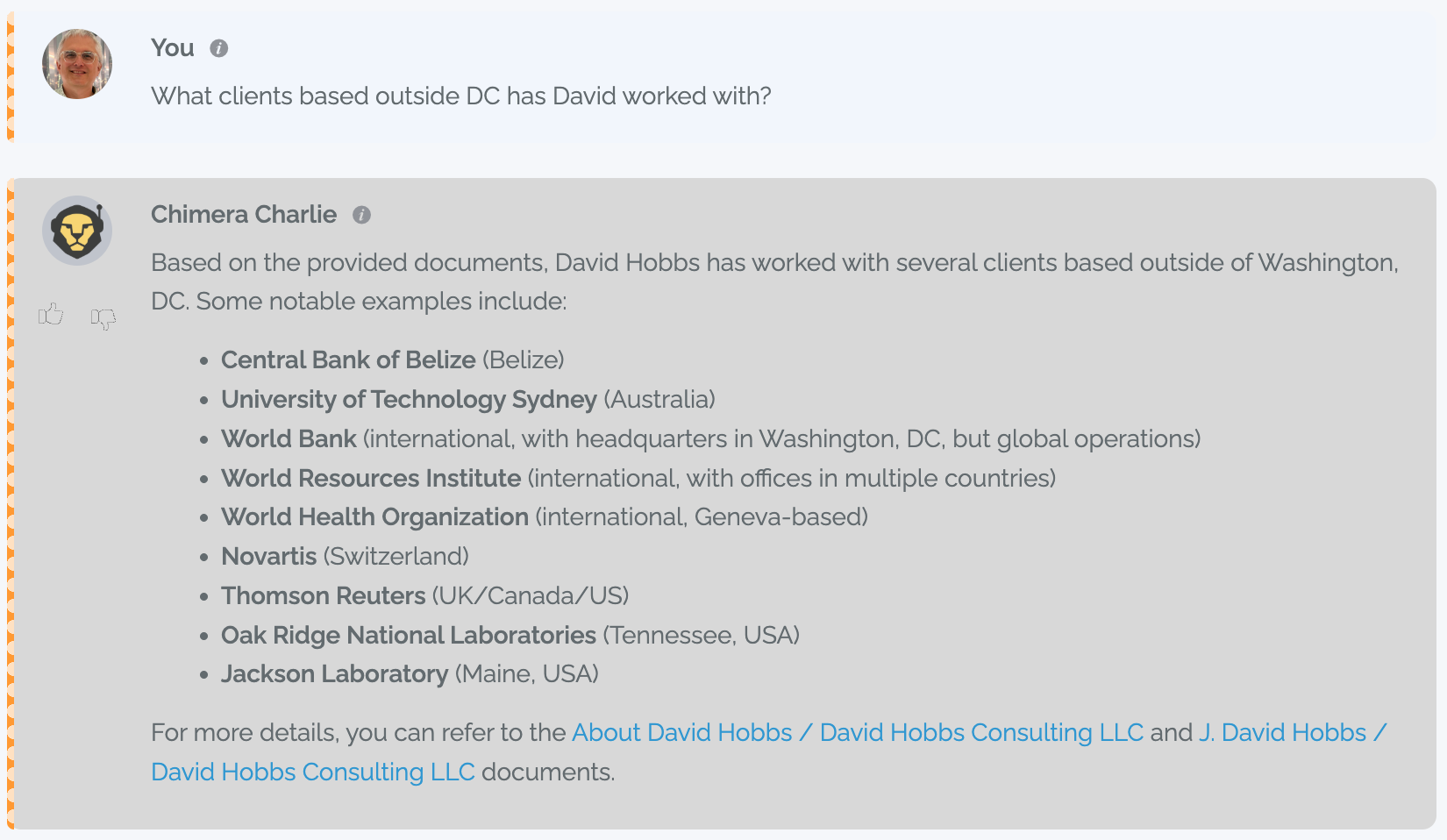

Semantic search

I have found semantic searches across a website to be useful. They let us ask questions about a website in a more useful manner and get the answer directly, rather than having to do more research yourself via other means.
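One common way to implement semantic search (not necessarily what Chimera does) is to compare an embedding of the question against embeddings of each page and rank by cosine similarity. The sketch below shows only that ranking step. The URLs and three-dimensional vectors are made-up stand-ins; real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_pages(query_vector, page_vectors, top_n=3):
    """Return the top_n (url, score) pairs, most similar first."""
    scored = [
        (cosine_similarity(query_vector, vector), url)
        for url, vector in page_vectors.items()
    ]
    scored.sort(reverse=True)
    return [(url, score) for score, url in scored[:top_n]]

# Hypothetical precomputed page embeddings (toy values for illustration only).
PAGE_VECTORS = {
    "/about/careers": [0.9, 0.1, 0.0],
    "/reports/annual-2023": [0.1, 0.9, 0.2],
    "/contact": [0.4, 0.1, 0.8],
}

# Hypothetical embedding of a question such as "Where can I find job openings?"
QUERY_VECTOR = [1.0, 0.0, 0.0]

top_matches = rank_pages(QUERY_VECTOR, PAGE_VECTORS, top_n=2)
```

The page embeddings only need to be computed once per crawl, so each new question costs one embedding call plus this cheap comparison.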

Move faster

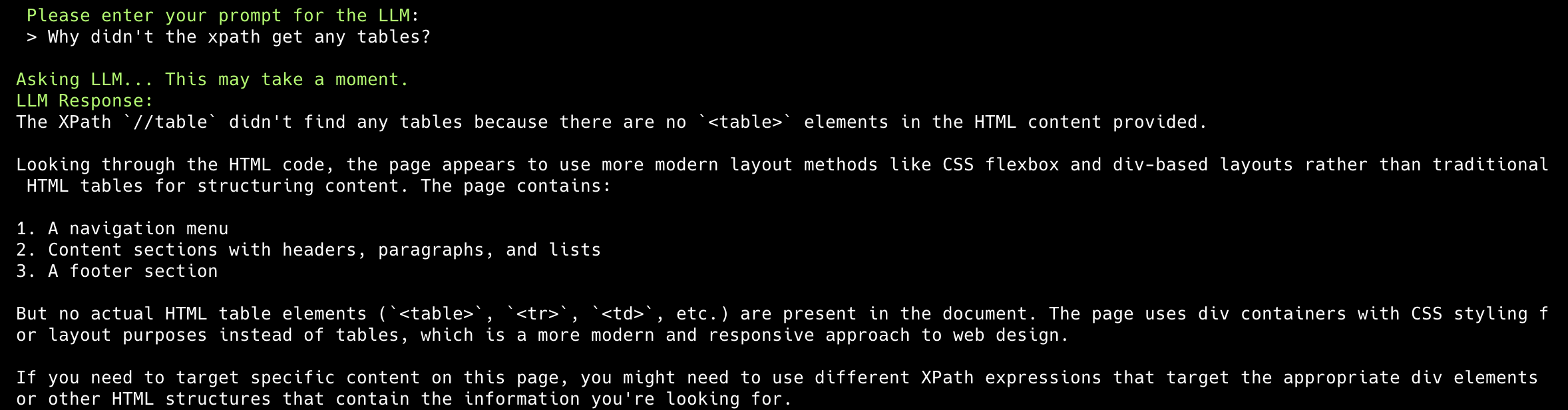

One of the most fascinating things for me in using LLMs is how you can more quickly switch between tasks with LLMs. For instance, I have written a lot of XPath and regexes over the years, but moving from a totally different task to writing an XPath (and having to remember the conventions, syntax, etc) is always a bit jarring. LLMs make this sort of thing far faster.

So LLMs can be used to generate structures far faster than you (probably) can. This can also help with cost (LINK): if the LLM defines the structure, you can then apply that structure far more efficiently (for example, the LLM defines an XPath and you apply it against the cache of 100,000 URLs, rather than having the LLM directly get that information for each of those URLs). Some examples of structures that LLMs can generate quickly:

XPath or Regexes, for extracting content in an efficient manner.

Spreadsheet or Chimera formulas, for calculating fields.

Database queries, from plain old SQL to Cypher (for graph database queries).

Scripts to do one-off tasks.
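As a rough illustration of the "define once, apply many times" idea, the sketch below applies a single XPath across a small cache of pages using only Python's standard library. The cache contents, the XPath, and the function name are all hypothetical. It also assumes the cached markup is well-formed XML, which real HTML often is not, and `xml.etree.ElementTree` supports only a limited subset of XPath, so a dedicated HTML parsing library would be the more realistic choice.

```python
import xml.etree.ElementTree as ET

# Hypothetical cache of already-crawled pages (URL -> markup).
PAGE_CACHE = {
    "/news/a": "<html><body><span class='author'>Ana Diaz</span></body></html>",
    "/news/b": "<html><body><div><span class='author'>Bo Chen</span></div></body></html>",
    "/news/c": "<html><body><p>No byline here</p></body></html>",
}

# Assume an LLM proposed this expression once, after seeing a few sample pages.
AUTHOR_XPATH = ".//span[@class='author']"

def extract_field(cache, xpath):
    """Apply one XPath to every cached page; None means no match or unparseable markup."""
    results = {}
    for url, markup in cache.items():
        try:
            root = ET.fromstring(markup)
        except ET.ParseError:
            results[url] = None  # markup was not well-formed XML
            continue
        node = root.find(xpath)
        has_text = node is not None and node.text
        results[url] = node.text.strip() if has_text else None
    return results

authors = extract_field(PAGE_CACHE, AUTHOR_XPATH)
```

Pages that come back as `None` are the interesting ones: they show where the pattern does not hold, and so where a different pattern (or an LLM) may be needed.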

Here's an example where I used an LLM to debug an XPath against some content:

New ways of interacting

The vast majority of literature on content inventorying and analysis presumes working in a spreadsheet. LLMs open up a new way of interacting with our analysis, by being able to chat in plain English or other languages. For content analysis, some of the useful characteristics of chat include:

Not having to remember the details of how to use different UIs at all: just ask to draw a chart and it's drawn

The LLM doing the "context switching" for me, where I can switch between semantic search and drawing a chart without a lot of thinking about it (no need to remember what screen to go to for semantic search or whatever)

Feeding back to the user which fields were used, as confirmation that the LLM did the right thing

The ability to leverage the metadata-about-the-metadata to drive the chat (for instance, if you have knowledge about all the fields in your analysis, then you can pass that to the LLM for a higher quality response).

The ability to leverage existing structures, such as just building Chimera charts (that
